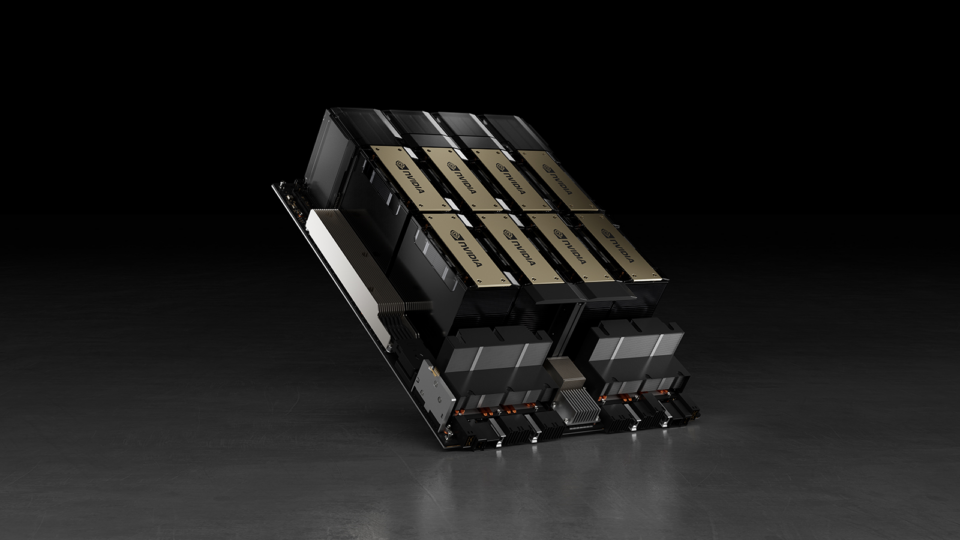

10 Nvidia H100 Tips For Faster Performance

The Nvidia H100 is a powerhouse of a GPU, designed to tackle the most demanding tasks in fields like AI, HPC, and data analytics. To get the most out of this incredible hardware, it’s essential to understand how to optimize its performance. Here are 10 expert tips to help you squeeze every last bit of speed out of your Nvidia H100:

1. Leverage Tensor Cores for AI Workloads

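The H100 features fourth-generation Tensor Cores, specialized units designed to accelerate the matrix multiply-accumulate operations at the heart of AI and deep learning workloads. Frameworks like TensorFlow and PyTorch route eligible matrix multiplications and convolutions to the Tensor Cores automatically through Nvidia libraries such as cuBLAS and cuDNN, but only when the data types are supported: enable TF32 for FP32 workloads where your framework does not already do so, or better, use FP16 or BF16 (or FP8 through Nvidia’s Transformer Engine). Because matrix multiplication is a core component of neural network training, keeping as much of it as possible on the Tensor Cores can significantly boost the performance of your AI models.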
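
Shape matters as well as data type. NVIDIA’s deep learning performance guidance recommends matrix dimensions that are multiples of 8 for FP16 and BF16 (exact requirements vary by data type and library version), and a common practice is to pad a layer such as a vocabulary projection up to the next such multiple. The helpers below are a minimal sketch of that padding arithmetic; the function names are hypothetical, and the extra padded outputs are assumed to be ignored or masked by your model.

```python
def pad_to_multiple(n: int, multiple: int = 8) -> int:
    """Round n up to the nearest multiple of `multiple`."""
    return ((n + multiple - 1) // multiple) * multiple


def gemm_dims_aligned(m: int, n: int, k: int, multiple: int = 8) -> bool:
    """True if every dimension of an (m x k) @ (k x n) product is aligned."""
    return all(d % multiple == 0 for d in (m, n, k))


if __name__ == "__main__":
    vocab = 50257  # GPT-2's vocabulary size, used here only as an example
    padded = pad_to_multiple(vocab, 64)
    print(vocab, "->", padded)  # 50257 -> 50304
    print(gemm_dims_aligned(4096, padded, 1024))  # True
```

Padding to 64 rather than 8 is a judgment call: it wastes a few more rows but satisfies stricter alignment preferences as well.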

2. Optimize Memory Usage

The H100 carries a large pool of high-bandwidth memory (HBM), but efficient memory management is still crucial for optimal performance. Allocating and freeing device memory (for example, with cudaMalloc and cudaFree) is expensive and can force synchronization, so avoid doing it inside hot loops: allocate once and reuse buffers wherever possible. Memory pooling, which recycles freed blocks instead of returning them to the driver, reduces both allocation overhead and fragmentation.
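
The idea behind pooling can be illustrated without a GPU. The sketch below is a hypothetical, CPU-only `BufferPool` that keeps released buffers in per-size free lists and hands them back out, so a loop that repeatedly needs a same-sized buffer allocates only once. On the GPU you rarely write this yourself: PyTorch’s caching allocator works along these lines, and CUDA 11.2 and later offer a stream-ordered allocator, cudaMallocAsync, backed by memory pools.

```python
from collections import defaultdict


class BufferPool:
    """Minimal size-keyed pool: reuse released buffers instead of reallocating."""

    def __init__(self) -> None:
        self._free: dict[int, list[bytearray]] = defaultdict(list)
        self.allocations = 0  # number of genuinely new buffers created

    def acquire(self, size: int) -> bytearray:
        free_list = self._free[size]
        if free_list:
            return free_list.pop()  # reuse; contents are NOT cleared
        self.allocations += 1
        return bytearray(size)

    def release(self, buf: bytearray) -> None:
        self._free[len(buf)].append(buf)


if __name__ == "__main__":
    pool = BufferPool()
    for _ in range(1000):
        buf = pool.acquire(1 << 20)  # 1 MiB scratch buffer per iteration
        buf[0] = 1                   # ... work with the buffer here ...
        pool.release(buf)
    print(pool.allocations)  # 1
```

As with cudaMalloc, a reused buffer is not zeroed, so callers must not assume its initial contents.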

3. Utilize Multi-Instance GPU (MIG) for Enhanced Resource Allocation

Nvidia’s MIG technology allows a single H100 GPU to be partitioned into as many as seven isolated instances, each with its own dedicated compute, memory, and cache. Running several smaller jobs, such as inference services or development notebooks, side by side on one GPU can dramatically improve resource utilization and reduce idle time. By sizing each instance to its workload, you can ensure that each task gets the resources it needs without wasting valuable GPU cycles.
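
Once an administrator has enabled MIG and created instances (with the nvidia-smi mig subcommands), each instance appears as its own device with a MIG-prefixed UUID, and a process can be pinned to one by setting CUDA_VISIBLE_DEVICES to that UUID. The sketch below extracts those UUIDs from the output of `nvidia-smi -L` and builds an environment for launching a job on a chosen instance. The function names are hypothetical, and the regular expression assumes the UUID format recent drivers print, so check it against your own nvidia-smi output.

```python
import os
import re
import subprocess

# Matches MIG device UUIDs such as "MIG-1a2b3c4d-..." in `nvidia-smi -L` output.
# The exact output layout is an assumption; verify against your driver.
_MIG_UUID = re.compile(r"\b(MIG-[^\s)]+)")


def parse_mig_uuids(nvidia_smi_list_output: str) -> list[str]:
    """Return MIG device UUIDs in the order nvidia-smi lists them."""
    return _MIG_UUID.findall(nvidia_smi_list_output)


def env_for_mig_instance(uuid: str) -> dict[str, str]:
    """Copy the current environment, exposing only one MIG instance to CUDA."""
    env = os.environ.copy()
    env["CUDA_VISIBLE_DEVICES"] = uuid
    return env


def list_mig_uuids() -> list[str]:
    """Query the local driver (requires nvidia-smi on PATH)."""
    out = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
    ).stdout
    return parse_mig_uuids(out)
```

For example, `subprocess.Popen(["python", "train.py"], env=env_for_mig_instance(list_mig_uuids()[0]))` would start a (hypothetical) train.py on the first listed instance, leaving the other instances free for other jobs.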

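4. Use NVLink for Faster GPU-to-GPU Data Transfer

The H100 supports fourth-generation NVLink, Nvidia’s high-speed interconnect, which provides up to 900 GB/s of total bandwidth per GPU in the SXM form factor, several times what PCIe Gen5 offers. NVLink primarily links GPUs to one another (through NVLink bridges or NVSwitch); on most x86 servers, traffic between the CPU and GPU still travels over PCIe, while Nvidia’s Grace Hopper systems connect CPU and GPU with NVLink-C2C. NVLink is a hardware feature rather than a software setting, so the practical steps are to choose NVLink-connected systems for multi-GPU work, confirm the topology with nvidia-smi topo -m, and use communication libraries such as NCCL that take advantage of it. This is particularly beneficial for workloads that exchange large amounts of data between GPUs, such as multi-GPU training or complex simulations.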
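
A back-of-the-envelope calculation shows why the interconnect matters. NVIDIA’s H100 SXM datasheet lists 900 GB/s of NVLink bandwidth versus 128 GB/s for PCIe Gen5; both are peak aggregate figures and real transfers achieve less, so treat the result as an upper bound on the benefit. The 10 GB payload below is an arbitrary, hypothetical example.

```python
def ideal_transfer_seconds(num_bytes: float, bandwidth_gb_per_s: float) -> float:
    """Time to move num_bytes at a given peak bandwidth (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


NVLINK_H100_SXM_GBPS = 900.0  # datasheet figure, total bandwidth
PCIE_GEN5_GBPS = 128.0        # datasheet figure, total bandwidth

if __name__ == "__main__":
    payload = 10e9  # hypothetical 10 GB exchanged between two GPUs
    t_nvlink = ideal_transfer_seconds(payload, NVLINK_H100_SXM_GBPS)
    t_pcie = ideal_transfer_seconds(payload, PCIE_GEN5_GBPS)
    print(f"NVLink: {t_nvlink * 1e3:.1f} ms, PCIe Gen5: {t_pcie * 1e3:.1f} ms")
    print(f"ideal speedup: {t_pcie / t_nvlink:.1f}x")
```

Using the total (bidirectional) figure for both links keeps the comparison like-for-like; for one-way transfers, halve both bandwidths and the ratio stays the same.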

5. Leverage CUDA 12 and Hopper Architecture Features

The H100 is built on Nvidia’s Hopper architecture (compute capability 9.0), the successor to Ampere, which introduces several performance-enhancing features such as fourth-generation Tensor Cores with FP8 support, the Transformer Engine, and thread block clusters. CUDA 11.8 was the first toolkit release to support Hopper, but many of its new programming features are exposed only from CUDA 12 onward, so keep your toolkit up to date. Recent CUDA releases provide compiler optimizations and updated libraries, such as cuBLAS and cuDNN, tuned for Hopper that can accelerate specific tasks.
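
When compiling your own CUDA code, target the H100 explicitly. The helper below is a hypothetical build-script sketch: it checks that a toolkit version is new enough for Hopper (CUDA 11.8 added sm_90 support) and assembles standard nvcc -gencode flags that embed native sm_90 machine code plus compute_90 PTX, which newer GPUs can JIT-compile. The source file name kernel.cu is a placeholder. At runtime, PyTorch users can confirm the target with torch.cuda.get_device_capability(), which reports (9, 0) on an H100.

```python
HOPPER_COMPUTE_CAPABILITY = (9, 0)  # H100 = sm_90
FIRST_CUDA_WITH_HOPPER = (11, 8)    # first toolkit with sm_90 support


def toolkit_supports_hopper(cuda_version: tuple[int, int]) -> bool:
    """Compare (major, minor) CUDA toolkit versions."""
    return cuda_version >= FIRST_CUDA_WITH_HOPPER


def nvcc_gencode_flags(cc: tuple[int, int] = HOPPER_COMPUTE_CAPABILITY) -> list[str]:
    """Flags that emit SASS for `cc` and PTX for forward compatibility."""
    arch = f"{cc[0]}{cc[1]}"
    return [
        "-gencode", f"arch=compute_{arch},code=sm_{arch}",
        "-gencode", f"arch=compute_{arch},code=compute_{arch}",
    ]


if __name__ == "__main__":
    print(toolkit_supports_hopper((12, 4)))  # True
    # kernel.cu is a placeholder source file name
    print(" ".join(["nvcc", "-O3", *nvcc_gencode_flags(), "kernel.cu"]))
```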

6. Profile and Optimize Your Applications

Understanding where your application spends most of its time is crucial for optimization. Use Nvidia Nsight Systems to get a system-wide timeline of CPU activity, data transfers, and kernel launches, and Nvidia Nsight Compute to analyze individual kernels in detail. (The legacy Nvidia Visual Profiler and nvprof do not support GPUs with compute capability 8.0 or higher, which includes the H100.) Once you’ve pinpointed the bottlenecks, you can apply targeted optimizations, such as parallelizing CPU-bound tasks, reducing memory transactions, or tuning individual kernels.
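
Before opening Nsight, a coarse first pass can show which stage of a pipeline dominates. The `SectionTimer` class below is a hypothetical, standard-library-only sketch that accumulates wall-clock time per named section. CUDA kernel launches are asynchronous, so for GPU code it accepts an optional synchronize function (for example, torch.cuda.synchronize in PyTorch) that is called at the start and end of each section; without it, GPU time would be charged to whichever section happens to wait next. Synchronizing serializes CPU and GPU, so use this for diagnosis only; Nsight Systems records the GPU timeline directly without that distortion.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Callable, Iterator, Optional


class SectionTimer:
    """Accumulate wall-clock time per named section of a program."""

    def __init__(self, sync: Optional[Callable[[], None]] = None) -> None:
        # For GPU code, pass a device-synchronize function so that queued
        # kernels are attributed to the section that launched them.
        self._sync = sync or (lambda: None)
        self.totals: dict[str, float] = defaultdict(float)

    @contextmanager
    def section(self, name: str) -> Iterator[None]:
        self._sync()  # don't charge earlier queued work to this section
        start = time.perf_counter()
        try:
            yield
        finally:
            self._sync()  # wait for this section's own work to finish
            self.totals[name] += time.perf_counter() - start

    def report(self) -> list[tuple[str, float]]:
        """Sections sorted from most to least total time."""
        return sorted(self.totals.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    timer = SectionTimer()
    for _ in range(3):
        with timer.section("data_loading"):
            time.sleep(0.02)   # stand-in for reading a batch
        with timer.section("compute"):
            time.sleep(0.005)  # stand-in for the training step
    for name, seconds in timer.report():
        print(f"{name:>12}: {seconds * 1e3:.1f} ms")
```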

7. Apply Mixed Precision Training

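Mixed precision training performs most calculations, particularly matrix multiplications, in lower-precision formats such as float16 or bfloat16, which the H100’s Tensor Cores process much faster, while keeping numerically sensitive parts of the computation, such as the master copy of the weights and certain reductions, in float32. In most cases this significantly speeds up AI model training with little or no loss in model accuracy. Automatic Mixed Precision (AMP), built into PyTorch and TensorFlow, simplifies integrating mixed precision into your training workflows: it chooses the precision for each operation and, when float16 is used, applies loss scaling so that small gradient values do not underflow to zero.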
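
Why loss scaling is needed with float16 can be demonstrated using Python’s standard library, whose struct module can round-trip values through IEEE half precision (format code "e"). The sketch below, with hypothetical helper names, shows a tiny gradient underflowing to zero in float16, then surviving when it is first multiplied by a scale factor (65536, the default initial scale of PyTorch’s GradScaler) and divided back out in higher precision. Because backpropagation is linear in the loss, scaling the loss scales every gradient by the same factor, which is what AMP relies on.

```python
import struct


def to_float16(x: float) -> float:
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def scaled_float16_roundtrip(grad: float, scale: float) -> float:
    """Scale into float16 range, store as float16, then unscale in float64."""
    return to_float16(grad * scale) / scale


if __name__ == "__main__":
    tiny_grad = 1e-8  # below float16's smallest subnormal (~6e-8)
    print(to_float16(tiny_grad))                       # 0.0: gradient lost
    print(scaled_float16_roundtrip(tiny_grad, 2**16))  # approximately 1e-08
```

bfloat16 has the same exponent range as float32, so this underflow problem largely disappears when training in bfloat16, which is one reason it is popular on the H100.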

8. Configure Cooling Systems for Optimal Thermal Performance

The performance of the H100, like that of many high-end GPUs, can be thermally limited: when the GPU runs too hot, it reduces its clock speeds to protect itself, and throughput drops. Make sure your server’s cooling, whether air or liquid, is rated for the GPU’s power draw and adequately configured to keep the GPU at a safe temperature. Monitor GPU temperatures and clock speeds during intense workloads (for example, with nvidia-smi) to identify any potential thermal bottlenecks.

9. Update Drivers and Firmware Regularly

Nvidia regularly releases driver updates that include performance optimizations, bug fixes, and support for new features. Keeping your drivers up to date can provide noticeable performance improvements, especially for newly released applications or those that heavily utilize the latest GPU technologies. Also, ensure that your GPU’s firmware is current, as updates can address issues related to stability, security, and performance.

10. Monitor Performance Metrics and Adjust

Finally, monitoring your application’s and system’s performance metrics is key to identifying areas for improvement. Use tools like Nvidia Data Center GPU Manager (DCGM) or nvidia-smi to track performance indicators such as GPU utilization, memory usage and bandwidth, temperature, and power consumption. Analyzing these metrics can help you pinpoint inefficiencies and make informed decisions about how to optimize your workloads for the best possible performance on the H100.
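
For fleet-wide collection, DCGM (through its dcgmi command-line tool or the DCGM exporter for Prometheus) is the usual choice; for a quick look at a single machine, nvidia-smi can print selected metrics as CSV. The sketch below parses the CSV output of nvidia-smi’s --query-gpu option for a handful of fields and flags GPUs whose utilization falls below a threshold. The helper names and the 80% threshold are hypothetical. Note that utilization.gpu reports the fraction of time at least one kernel was running, not how fully the SMs were used, so a high value alone does not prove the GPU is efficiently loaded.

```python
import subprocess
from typing import Optional

# Field names accepted by `nvidia-smi --query-gpu`; see `nvidia-smi --help-query-gpu`.
FIELDS = ["index", "utilization.gpu", "memory.used", "power.draw", "temperature.gpu"]
QUERY = [
    "nvidia-smi",
    f"--query-gpu={','.join(FIELDS)}",
    "--format=csv,noheader,nounits",
]


def _to_number(text: str) -> Optional[float]:
    try:
        return float(text.strip())
    except ValueError:  # e.g. "[N/A]" for fields a GPU does not report
        return None


def parse_gpu_samples(csv_text: str) -> list[dict[str, Optional[float]]]:
    """One dict per GPU: utilization in %, memory in MiB, power in W, temp in C."""
    return [
        dict(zip(FIELDS, (_to_number(v) for v in line.split(","))))
        for line in csv_text.strip().splitlines()
    ]


def underutilized(samples: list[dict[str, Optional[float]]],
                  min_util: float = 80.0) -> list[int]:
    """Indices of GPUs whose utilization is below min_util percent."""
    return [
        int(s["index"])
        for s in samples
        if s["utilization.gpu"] is not None and s["utilization.gpu"] < min_util
    ]


def sample_local_gpus() -> list[dict[str, Optional[float]]]:
    """Take one sample from the local driver (requires nvidia-smi on PATH)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_samples(out)
```

Calling sample_local_gpus() periodically during a run, and logging temperature alongside utilization and power, also helps spot the thermal throttling described in tip 8.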

By implementing these strategies, you can unlock the full potential of the Nvidia H100, achieving faster performance, higher throughput, and greater efficiency in your compute-intensive applications.

What are the key benefits of using Nvidia’s Tensor Cores for AI workloads?

The Tensor Cores in the Nvidia H100 are designed to accelerate matrix multiplication, a fundamental operation in deep learning and AI. They perform these operations at much higher throughput, and with better energy efficiency per operation, than the GPU’s general-purpose CUDA cores. This results in faster AI model training times and improved overall system efficiency.

How does the Multi-Instance GPU (MIG) feature enhance resource allocation on the H100?

MIG allows the H100 to be divided into as many as seven independent GPU instances, each with its own dedicated set of resources, including streaming multiprocessors (and with them CUDA cores and Tensor Cores), memory, and cache. This feature improves resource utilization by enabling multiple applications or users to share the GPU simultaneously, with hardware isolation between instances so that one workload does not slow down another. Each instance can be configured to meet the specific requirements of its workload, ensuring that resources are allocated efficiently and reducing idle time.